Facebook has already made clear that it wants AI to handle more of the moderation work on its platform. Today, the company announced its latest step toward that goal.

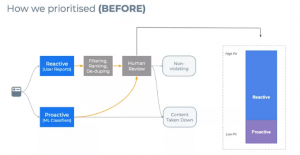

Here is how moderation works on Facebook: posts that appear to violate the company’s rules (which cover everything from spam to hateful language to content that “glorifies violence”) are flagged, either by users or by machine learning filters. Some very clear-cut cases are handled automatically (responses may include removing a post or blocking an account), while the rest are queued for review by human moderators.
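The flag-then-route step can be pictured as a simple confidence threshold. This is a minimal sketch, not Facebook’s system: the `FlaggedPost` type, its `violation_score` field, and the `AUTO_ACTION_THRESHOLD` value of 0.98 are all hypothetical, since the article does not say how a case is judged clear-cut.

```python
from dataclasses import dataclass

# Hypothetical cutoff: the article does not say how Facebook decides a case is clear-cut.
AUTO_ACTION_THRESHOLD = 0.98


@dataclass
class FlaggedPost:
    post_id: str
    flagged_by: str         # "user" or "classifier"
    violation_score: float  # hypothetical model confidence (0 to 1) that the post breaks a rule


def route(post: FlaggedPost) -> str:
    """Decide where a flagged post goes next."""
    if post.violation_score >= AUTO_ACTION_THRESHOLD:
        # Clear-cut case: act automatically, e.g. remove the post or block the account.
        return "automatic_action"
    # Everything else waits for a human moderator.
    return "human_review_queue"


for post in [FlaggedPost("p1", "classifier", 0.995), FlaggedPost("p2", "user", 0.40)]:
    print(post.post_id, route(post))
```

Whether a post was flagged by a user or by a classifier does not change the routing in this sketch; only the confidence score does.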

Facebook employs about 15,000 of these moderators worldwide and has been criticized in the past for not giving them enough support and for employing them in conditions that can lead to trauma. Their job is to sort through flagged posts and decide whether they violate the company’s various policies.

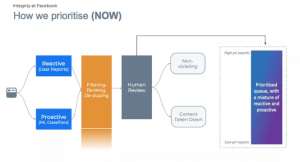

In the past, moderators reviewed posts more or less chronologically, dealing with them in the order they were reported. Now, Facebook says it wants the most important posts to be seen first, and it is using machine learning to help. Going forward, a mix of machine learning algorithms will be used to sort this queue, prioritizing posts based on three criteria: virality, severity, and the likelihood that they break the rules.
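The switch from a chronological queue to a prioritized one can be sketched with Python’s `heapq` module. In this hypothetical `ReviewQueue`, each post carries a priority score (how that score is computed is left open here), the highest score is reviewed first, and ties fall back to the order in which posts were reported, which is what the old chronological queue did.

```python
import heapq
import itertools


class ReviewQueue:
    """Hypothetical review queue: highest priority first, ties broken by report order."""

    def __init__(self):
        self._heap = []
        self._report_order = itertools.count()  # 0, 1, 2, ... in the order posts arrive

    def push(self, post_id: str, priority: float) -> None:
        # heapq is a min-heap, so store the negated priority to pop the largest first.
        heapq.heappush(self._heap, (-priority, next(self._report_order), post_id))

    def pop(self) -> str:
        _, _, post_id = heapq.heappop(self._heap)
        return post_id

    def __len__(self) -> int:
        return len(self._heap)


queue = ReviewQueue()
queue.push("spam_post", 0.1)        # reported first
queue.push("threat_post", 0.9)      # reported second, but more urgent
queue.push("borderline_post", 0.1)  # reported third
order = [queue.pop() for _ in range(len(queue))]
print(order)  # ['threat_post', 'spam_post', 'borderline_post']
```

If every post is pushed with the same priority, the queue reduces to plain report order, i.e. the old chronological behavior.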

It’s not clear exactly how these criteria are weighted, but Facebook says its aim is to deal with the most damaging posts first. So the more viral a post is (the more it’s being shared and seen), the sooner it will be dealt with. The same goes for a post’s severity: Facebook says it ranks posts involving real-world harm as the most important, which could mean content involving terrorism, child exploitation, or self-harm. Posts that are annoying but not traumatic, such as spam, are ranked as the least important to review.
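Because Facebook has not said how the three criteria are weighted, the following is only one plausible way to combine them: a weighted sum. The severity tiers, the weights, the share-to-view conversion, and the `priority` function are all hypothetical values chosen for illustration.

```python
import math

# Hypothetical severity tiers: the article names these kinds of content but gives no numbers.
SEVERITY = {
    "terrorism": 1.0,
    "child_exploitation": 1.0,
    "self_harm": 1.0,
    "hate_speech": 0.6,
    "spam": 0.1,
}

# Hypothetical weights: Facebook has not said how the three criteria are weighted.
W_VIRALITY, W_SEVERITY, W_LIKELIHOOD = 0.3, 0.5, 0.2


def virality(shares: int, views: int) -> float:
    """Squash share and view counts into [0, 1); more sharing and viewing moves it toward 1."""
    reach = shares * 10 + views  # hypothetical: count one share as ten views
    return 1 - math.exp(-reach / 10_000)


def priority(category: str, shares: int, views: int, p_violation: float) -> float:
    """Weighted sum of virality, severity, and the estimated chance the post breaks a rule."""
    return (W_VIRALITY * virality(shares, views)
            + W_SEVERITY * SEVERITY[category]
            + W_LIKELIHOOD * p_violation)


viral_spam = priority("spam", shares=5_000, views=200_000, p_violation=0.9)
quiet_self_harm = priority("self_harm", shares=0, views=50, p_violation=0.7)
print(quiet_self_harm > viral_spam)  # True
```

With these made-up weights, a barely seen self-harm post still outranks heavily shared spam, matching the ordering the article describes; different weights could produce a different ordering.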

Facebook has shared details in the past about how its machine learning filters analyze posts. These systems include a model named “WPIE,” which stands for “whole post integrity embeddings” and takes what Facebook calls a “holistic” approach to assessing content.

This means the algorithms judge the various elements of a given post together, trying to work out what the image, caption, poster, and so on reveal in combination. If someone says they are selling a “full batch” of “special sweets” alongside a picture of what looks like food, are they talking about Rice Krispies squares or edibles?
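One simple way to judge a post as a whole is early fusion: concatenate embeddings of each part (image, caption, poster) and score the combined vector with a single classifier. This sketch is not WPIE, whose architecture the article does not describe; the two-number embeddings, the weights, and the bias are hand-picked, hypothetical stand-ins for what a real model would learn.

```python
import math


def fuse(image_emb, caption_emb, poster_emb):
    """Early fusion: concatenate per-part embeddings into one whole-post vector."""
    return [*image_emb, *caption_emb, *poster_emb]


def score(features, weights, bias):
    """Logistic score over the fused vector: estimated chance the post breaks a rule."""
    if len(features) != len(weights):
        raise ValueError("feature and weight lengths differ")
    z = sum(f * w for f, w in zip(features, weights)) + bias
    return 1 / (1 + math.exp(-z))


# Hypothetical features, hand-picked for illustration (a real model learns these).
image = [0.8, 0.1]      # [looks like baked goods, looks like drugs]
caption = [0.7, 0.6]    # [mentions food, uses coded slang such as "special sweets"]
WEIGHTS = [0.2, 2.0, 0.1, 1.5, 2.5]  # the last weight applies to the poster signal
BIAS = -2.0

bakery_score = score(fuse(image, caption, [0.0]), WEIGHTS, BIAS)   # poster: bakery page
suspect_score = score(fuse(image, caption, [0.9]), WEIGHTS, BIAS)  # poster: prior drug-sale flags
print(f"{bakery_score:.2f} {suspect_score:.2f}")  # 0.34 0.83
```

The image and caption are identical in both calls; only the poster signal differs, and it shifts the score noticeably. That is the point of scoring the parts together rather than one at a time.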

Facebook uses a variety of machine learning algorithms to sort content, including a “holistic” assessment tool called WPIE.

Facebook’s use of AI to moderate its platform has come under scrutiny in the past, with critics noting that AI lacks a human’s ability to judge the context of much online communication.

Facebook’s Chris Parlow, a member of the company’s moderator engineering team, acknowledged that AI has its limits but told reporters that the technology could still play a role in removing unwanted content. “This system aims to pair AI with human reviewers to reduce overall mistakes,” says Parlow. “AI will never be perfect.”

When asked what share of posts the company’s machine learning systems misclassify, Parlow didn’t give a direct answer, but noted that Facebook only lets automated systems work without human supervision when they are as accurate as human reviewers.
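The accuracy condition Parlow describes amounts to a gate on the system as a whole rather than on individual posts. A minimal sketch, assuming both the model and human reviewers are scored against the same labelled sample; this evaluation setup is hypothetical, and since the article gives no figures, the counts below are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class EvalResult:
    correct: int  # decisions that matched the reference label
    total: int    # decisions evaluated

    @property
    def accuracy(self) -> float:
        return self.correct / self.total


def may_run_unsupervised(model: EvalResult, human: EvalResult) -> bool:
    """Allow fully automatic action only if the model is at least as accurate as human reviewers."""
    return model.accuracy >= human.accuracy


human = EvalResult(correct=955, total=1000)
print(may_run_unsupervised(EvalResult(correct=940, total=1000), human))  # False
print(may_run_unsupervised(EvalResult(correct=970, total=1000), human))  # True
```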